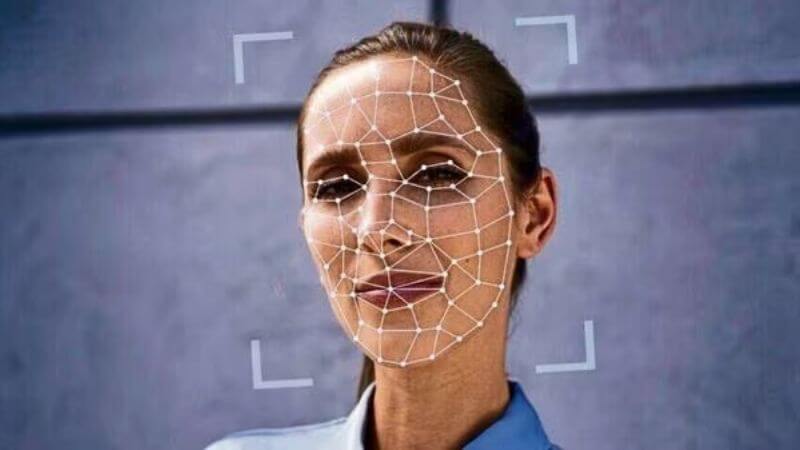

The era of unchecked deepfakes in India is about to change. From February 20, every AI-generated video, synthetic audio clip, or deepfake image on social media must clearly say it’s not real. For the first time, the government has brought artificial intelligence content directly under regulatory oversight. And the new rules come with sharp deadlines and serious consequences.

Signed by Joint Secretary Ajit Kumar, the gazette notification G.S.R. 120 (E) amends the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. What’s changed? Platforms now have to make sure users can instantly spot AI-generated content. And if content is found to violate the law, companies like Meta and Google have as little as three hours to pull it down, not the 36 hours they had before.

The rules also require platforms to embed metadata and unique identifiers in synthetic content so authorities can trace where it came from, even if it gets shared a hundred times across different apps.

What Counts As AI Content?

The notification defines “synthetically generated information” as any audio, video, or visual content that’s been cooked up or heavily altered by a computer to look like real people or real events. But here’s what doesn’t count: routine edits like adjusting brightness, compressing a file, reducing audio noise, or translating text. As long as you’re not changing what the content actually means, you’re in the clear.

Research papers get a pass, too. So do training materials, presentations, PDFs, and draft documents that use stock images or placeholder content. The government’s clearly trying to go after misleading deepfakes and synthetic media that could fool people, not someone color-correcting a wedding video.

Big Tech Faces New Compliance Headaches

The real pressure lands on major platforms. Under the new Rule 4(1A), Instagram, YouTube, and Facebook will have to ask you before you upload anything: Did you use AI to make this? But they can’t just take your word for it. They’re also supposed to run automated checks on file format, metadata, and source information to verify whether the content is synthetic.

If it is, the platform has to slap on a disclosure tag. And once that tag’s there, nobody can mess with it. It can’t be deleted, hidden, or shrunk down to where nobody notices.

Platforms that knowingly let fake content slide lose their safe harbour protections. That’s a big deal because it exposes them to legal liability for what users post. There’s a small win for platforms here, though. The government dropped an earlier proposal from October 2025 that would have required watermarks covering at least 10% of the screen. The Internet and Mobile Association of India (IAMAI), which represents Google, Meta, Amazon, and others, pushed back hard, arguing that this was impossible to implement across every format. That demand is gone now, though the labelling requirement remains.

Three-Hour Window, Down From 36

Forget the old 36-hour deadline. Platforms now have three hours to act on certain lawful takedown orders, and other timelines have been reduced to 12 hours. The faster deadlines apply particularly to content that crosses into criminal territory: child sexual abuse material, obscene content, fake records, explosives-related material, or deepfakes impersonating real people.

The rules tie synthetic content directly to laws like the Bharatiya Nyaya Sanhita, POCSO Act, and the Explosive Substances Act. Translation: If your deepfake breaks one of these laws, platforms have to move fast, and you could face serious legal consequences.

What Must Platforms Do Beyond Labelling?

There’s more to it than sticking a label on AI content. Platforms have to embed metadata and unique IDs into every piece of synthetic media. Think of it as a digital fingerprint that follows the content wherever it goes. That way, even if a deepfake gets re-uploaded or shared across WhatsApp, Telegram, and X, authorities can still trace it back to its source.

Platforms also need to run regular awareness campaigns. At least once every three months, they have to warn users, in English and in the languages listed in the Eighth Schedule of the Constitution, about the consequences of misusing AI content. The goal is to keep people informed about the penalties so they think twice before posting misleading synthetic media.

On the flip side, the government has clarified that taking down synthetic content under these rules won’t strip platforms of their safe harbour protection under Section 79 of the IT Act. That’s important because safe harbour is what keeps platforms from being held liable for every random thing a user posts, as long as they follow due diligence norms.

Conclusion

This isn’t just another tech policy tweak. It’s a clear signal that India is stepping into the AI era with guardrails. Deepfakes and synthetic media can entertain, educate, and drive innovation. But when they mislead or harm, the consequences can be massive. The new rules shift responsibility onto platforms and creators alike. From February 20 onward, if it’s fake, it must say so. And if it crosses the line, it disappears fast.
